File Based Loader is the inbound integration tool. Oracle has also HR2HR but that is for integration between EBS/PSFT and Fusion. Let's see what are these two.

HR2HR:

HR2HR is designed to allow a source system of record (typically one of the applications unlimited products) to co-exist alongside a target Fusion system, allowing data to be iteratively transferred from the source to the target ensuring that Fusion is synchronized with the system of record. Typically this is used by customers who want to take advantage of new functionality provided in the Fusion Talent, Compensation, Core HR offerings, whilst maintaining their source system as the system of record for reference and employee data.

In HR2HR there are predefined extract mechanisms that are built for EBS and PSFT. These generate a snapshot of the data that exists in the system of record. This is then moved onto an SFTP server and Fusion is notified via a webservice call that such a file is ready for processing. Fusion then retrieves the file from the SFTP server, unzips it and initiates processing. In the 1st stage of processing it is passed through an ODI layer where the data is compared against the existing Fusion data and a series of records is written to the staging tables that represent the specific actions that need to be taken on particular data objects in order to synchronize them with the system of record. These changes might be creates, updates, deletes or data effective changes.

File Based Loader:

File Based Loader is designed to support the same set of objects as HR2HR but where there is not a predefined source system of record. The intention is that this will be used by customers who are only performing an initial data load and who want to bulk load a large volume of data including history. Unlike HR2HR, File Loader simply takes the data from the zip files and loads it directly into the data loading stage tables rather than going through an ODI layer. It then processes the staging data to resolve the primary and foreign key references.

Because File Loader does not have a dependency on ODI it has been possible to extend the number of attributes that are supported within each object, and so for example descriptive flexfield columns are supported in File Loader whereas they are not available in HR2HR.

However, as File Based Loader does not have any pre-defined extracts to populate the data files it is necessary for implementation teams to develop their own extract mechanisms and to ensure that the data is in a form that will satisfy the Fusion business rules.

File Loader supports both data creation and updates. It also supports the full creation of history as well as processing terminated work relationships.

So in short

HR2HR:

HR2HR is designed to allow a source system of record (typically one of the applications unlimited products) to co-exist alongside a target Fusion system, allowing data to be iteratively transferred from the source to the target ensuring that Fusion is synchronized with the system of record. Typically this is used by customers who want to take advantage of new functionality provided in the Fusion Talent, Compensation, Core HR offerings, whilst maintaining their source system as the system of record for reference and employee data.

In HR2HR there are predefined extract mechanisms that are built for EBS and PSFT. These generate a snapshot of the data that exists in the system of record. This is then moved onto an SFTP server and Fusion is notified via a webservice call that such a file is ready for processing. Fusion then retrieves the file from the SFTP server, unzips it and initiates processing. In the 1st stage of processing it is passed through an ODI layer where the data is compared against the existing Fusion data and a series of records is written to the staging tables that represent the specific actions that need to be taken on particular data objects in order to synchronize them with the system of record. These changes might be creates, updates, deletes or data effective changes.

File Based Loader:

File Based Loader is designed to support the same set of objects as HR2HR but where there is not a predefined source system of record. The intention is that this will be used by customers who are only performing an initial data load and who want to bulk load a large volume of data including history. Unlike HR2HR, File Loader simply takes the data from the zip files and loads it directly into the data loading stage tables rather than going through an ODI layer. It then processes the staging data to resolve the primary and foreign key references.

Because File Loader does not have a dependency on ODI it has been possible to extend the number of attributes that are supported within each object, and so for example descriptive flexfield columns are supported in File Loader whereas they are not available in HR2HR.

However, as File Based Loader does not have any pre-defined extracts to populate the data files it is necessary for implementation teams to develop their own extract mechanisms and to ensure that the data is in a form that will satisfy the Fusion business rules.

File Loader supports both data creation and updates. It also supports the full creation of history as well as processing terminated work relationships.

So in short

- Use FBL to Load HR Data from your legacy system to Fusion HCM

- It is a flat file based data upload utility

- It validates and loads data into Fusion

- It supports error correction

- It loads complex data objects with full date effective history

- You can use multiple data sources to prepare the data file

- Can be used as Application to Application Integration

- Can be used as Conversion Tool

- Can be used for on going data sync

- It is mainly used for Bulk data loading

- Can be used to update business objects provided they were created using FBL. At the end of this series I will show that data created in Fusion through UI can not be updated using FBL.

How File-Based Loader Works

File-Based Loader uses the Oracle Fusion HCM Load Batch Data process to load your source data to the Oracle Fusion application tables. Load Batch Data is a generic utility for loading data to Oracle Fusion from external sources; for example, it is used by Oracle Fusion Coexistence for HCM, the Oracle Fusion Enterprise Structures Configurator (ESC), and the Oracle Fusion HCM Spreadsheet Data Loader. A key feature of Load Batch Data is that data is imported initially in object-specific batches to stage tables. Data is prepared for load in the stage tables and then loaded from the stage tables to the Oracle Fusion application tables.

File-Based Loader uses the Oracle Fusion HCM Load Batch Data process to load your source data to the Oracle Fusion application tables. Load Batch Data is a generic utility for loading data to Oracle Fusion from external sources; for example, it is used by Oracle Fusion Coexistence for HCM, the Oracle Fusion Enterprise Structures Configurator (ESC), and the Oracle Fusion HCM Spreadsheet Data Loader. A key feature of Load Batch Data is that data is imported initially in object-specific batches to stage tables. Data is prepared for load in the stage tables and then loaded from the stage tables to the Oracle Fusion application tables.

For this process to succeed, you need to deliver your data to File-Based Loader in a format that satisfies Oracle Fusion business rules. The Oracle Fusion Human Capital Management File-Based Loader V1.1 User’s Guide explains how to meet this requirement. The MOS note is Oracle Fusion Human Capital Management File-Based Loader V1.1 [ID 1533860.1]

What are other tools available

Oracle has a documented these under HCM SaaS Integrations. Here is the slide which talks about that

What is the latest version of FBL

The latest FBL version is FBL1.1 and is available from 11.1.5.0.0.

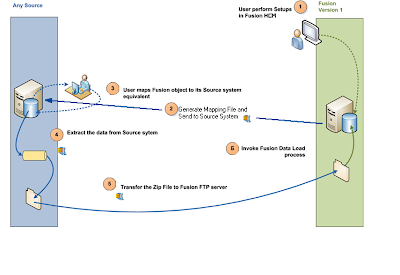

End to End Picture

This picture shows the full flow of FBL data loading process. The process starts from Fusion HCM. In order to successfully load data you need to perform the following steps.

1.User Performs Steups in Fusion HCM - Select and Configure Offerings like Workforce Deployment, Workforce Compensation depending on your requirements and business process flow. Create Implementation Projects and complete all the configuration tasks.

2. Generate Mapping File and Send to Source System - Once the configuration steps are completed you need to create reference mapping file which will be used while creating actual data files. In order to do that you need to first specify the configuration parameters. Go to Administration --> Setup and Maintainace.

Click on All task tab and search for task Manage HCM Configuration for Coexistence as shown

Click on Go to Task. Which will open the screen like the next picture

Click on Detach to see the full table. Specify the values of all the parameters. Below are the details of the parameters. Depending on your version you will see some extra parameters. For me it is 11.1.4.0.0 and FBL 1.0

Click on All task tab and search for task Manage HCM Configuration for Coexistence as shown

Click on Go to Task. Which will open the screen like the next picture

| PARAMETER NAME | PARAMETER VALUE |

| Environment Properties File | /u01/APPLTOP/instance/ess/config/environment.properties |

| HRC Product Top | /u01/APPLTOP/fusionapps/applications/hcm/hrc |

| Load HCM Data Files Automatically | N |

| Loader Cache Clear Limit | 99 |

| Loader Chunk Size | 200 |

| Loader Maximum Errors | 100 |

| Loader Number of Processes | 1 |

| Loader Save Size | 1 |

| ODI Context | DEVELOPMENT |

| ODI Log Level | 2 |

| ODI Language | AMERICAN_AMERICA.WE8ISO8859P1 |

| ODI Password | (As supplied by Oracle) |

| ODI Root Directory | /u01/oracle/ODI_FILE_ROOT_HCM |

| ODI User | FUSION_APPS_HCM_ODI_SUPERVISOR_APPID |

| ODI Work Repository | FUSIONAPPS_WREP |

| On Demand FTP Root Directory | /u01/oracle/ODI_FILE_ROOT_HCM/ftp_inbox |

| Use Python Loader | Y |

| User Name Expression | Optionally, you can specify one of: |

| loaderCtx.getPersonAttr(“PersonNumber”) | |

| loaderCtx.getAssignmentAttr(“AssignmentNumber”) |

Note: If you have on-premise customer then replace APPLTOP with the actual path. Specify ODI password. All of these will be provided by your DBA who installed Fusion Apps.

Your parameters should look like this

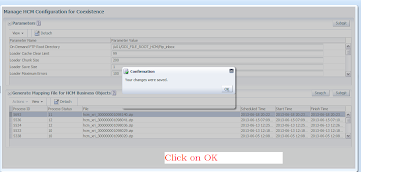

Click on Submit as shown in the next picture to save this Configuration in the system.

Once you click you submit it will give you Confirmation and click on OK.

Now that configuration parameters are set and also Offering configurations are complete we need to generate the mapping file. From the same screen click on Submit from within Generate Mapping File for HCM Business Objects as shown

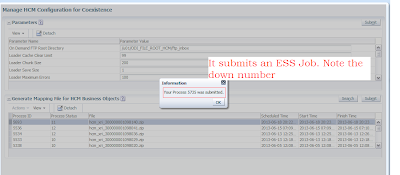

It will submit an Enterprise Schedular Job. Note down the job number. We may need this to debug and find your corresponding zip file name.

Click on refresh to check the status. If the process status is 12 that means your file has been successfully created. Any other status means you have some problems. To find out exact cause you have to check the ESS log from Navigator --> Tools --> Scheduled Process. If you don't find meaningful error detail then you have to check in Fusion HCM Domain EM console. I will explain in a separate post about how to check log files from EM. Once completed note the zip file name and log into unix box and go to ODI Root Directory/E_1

For Example the process ended in status 12 as shown.

For me the ODI root/E_1 is

/u01/ODI_FILE_ROOT_HCM/E_1. For SaaS customer this will done by Oracle or they can give you access where all these files will be copied.

Now use any FTP tool to copy the file. As shown in the picture it creates a separate folder for each run. The name is same as the numeric part of the zip file.

I used WinSCP to copy the file from unix server to my local machine.

You can now see the file in your local machine.

Unzip the file and you can see list of cross reference files generated by Fusion.

In my example I will show how to upload Location in fusion. And all the locations which I will upload will be associated with Common Set ID so that the locations are available for all Legal Entities.

You can upload all these files and store them in mapping tables using SQL*Loader. While writing extraction script you can dynamically get the information from there and create data files.

So till now I have explained

Now that configuration parameters are set and also Offering configurations are complete we need to generate the mapping file. From the same screen click on Submit from within Generate Mapping File for HCM Business Objects as shown

It will submit an Enterprise Schedular Job. Note down the job number. We may need this to debug and find your corresponding zip file name.

Click on refresh to check the status. If the process status is 12 that means your file has been successfully created. Any other status means you have some problems. To find out exact cause you have to check the ESS log from Navigator --> Tools --> Scheduled Process. If you don't find meaningful error detail then you have to check in Fusion HCM Domain EM console. I will explain in a separate post about how to check log files from EM. Once completed note the zip file name and log into unix box and go to ODI Root Directory/E_1

For Example the process ended in status 12 as shown.

For me the ODI root/E_1 is

/u01/ODI_FILE_ROOT_HCM/E_1. For SaaS customer this will done by Oracle or they can give you access where all these files will be copied.

Now use any FTP tool to copy the file. As shown in the picture it creates a separate folder for each run. The name is same as the numeric part of the zip file.

I used WinSCP to copy the file from unix server to my local machine.

You can now see the file in your local machine.

Unzip the file and you can see list of cross reference files generated by Fusion.

In my example I will show how to upload Location in fusion. And all the locations which I will upload will be associated with Common Set ID so that the locations are available for all Legal Entities.

You can upload all these files and store them in mapping tables using SQL*Loader. While writing extraction script you can dynamically get the information from there and create data files.

So till now I have explained

- What is HR2HR?

- What is FBL and how does it work?

- How to configure Fusion to start using FBL?

- How to generate Cross Reference Files?

- How to get that from Unix and save it to Local machine?

In the following two series I have explained how to create data file for Location Business Object and how to register the file in Fusion for processing:

----------Updated on 7th July 2013 with link of the remaining two series...

----------Updated on 7th July 2013 with link of the remaining two series...

Cheers.....................................

Hi,

ReplyDeleteCould you please provide the cross refernce data files for the referrence.

Thank You,

Srini.

Dear Mehabub Sheikh,

ReplyDeleteI have tried to create the referrence files using the Manage HCM Configuration for Coexistence. i could not get the files but i have the E_1 > Stage folders created but no data files. we are on RUP3 on premise. Please let me know what could be the problem. Your help is highly needed.

Thanks in advance!

Srinivasulu Kamineni.

Hi Srinivasulu,

ReplyDeleteDid you check the process status?

It should be 12.

If it is not then it has ended in error. Check the ESS log file for the process id in HCM Domain EM.

Thanks,

Mehabub

Dear Mehabub,

ReplyDeleteProcess went on error. when i see the log going through Tools > Schedule Process i do not have much information just the below.

Extracting Cross Reference Data for enterprise 1.

could you please let me know how to see the complete log.

Thanks in advance!

Srinivasulu.

Hi Mehabub,

ReplyDeleteWhen I submit an ESS Job it return status 10. Then I check ESS log file in HCM Domain, it say:

Message ID ESS-07004

Execution error for request 1204. Reason: ODI-1351: An unexpected error was thrown during execution of the scenario 'HCM_BL_MAINT_EXPORT_REFERENCES - 001'

And there is detail in ODI Session

Return Code 7000

Message ODI-1226: Step Export SETID_SETS References fails after 1 attempt(s). ODI-1240: Flow Export SETID_SETS References fails while performing a Integration operation. This flow loads target table XR_SETID_SET. ODI-1228: Task Export SETID_SETS References (Integration) fails on the target FILE connection FILE_OUTPUT_HCM. Caused By: java.sql.SQLException: ODI-40449: File not found: /tmp/ODI_IN/export/XR_SETID_SET.dat at com.sunopsis.jdbc.driver.file.FileTruncator.truncateFile(FileTruncator.java:40) at com.sunopsis.jdbc.driver.file.impl.commands.CommandTruncate.execute(CommandTruncate.java:39) at com.sunopsis.jdbc.driver.file.CommandExecutor.executeCommand(CommandExecutor.java:33) at com.sunopsis.jdbc.driver.file.FilePreparedStatement.execute(FilePreparedStatement.java:178) at oracle.odi.runtime.agent.execution.sql.SQLCommand.execute(SQLCommand.java:163) at oracle.odi.runtime.agent.execution.sql.SQLExecutor.execute(SQLExecutor.java:102) at oracle.odi.runtime.agent.execution.sql.SQLExecutor.execute(SQLExecutor.java:1) at oracle.odi.runtime.agent.execution.TaskExecutionHandler.handleTask(TaskExecutionHandler.java:50) at com.sunopsis.dwg.dbobj.SnpSessTaskSql.processTask(SnpSessTaskSql.java:2913) at com.sunopsis.dwg.dbobj.SnpSessTaskSql.treatTask(SnpSessTaskSql.java:2625) at com.sunopsis.dwg.dbobj.SnpSessStep.treatAttachedTasks(SnpSessStep.java:580) at com.sunopsis.dwg.dbobj.SnpSessStep.treatSessStep(SnpSessStep.java:468) at com.sunopsis.dwg.dbobj.SnpSession.treatSession(SnpSession.java:2128) at com.sunopsis.dwg.dbobj.SnpSession.treatSession(SnpSession.java:1931) at oracle.odi.runtime.agent.processor.impl.StartScenRequestProcessor$2.doAction(StartScenRequestProcessor.java:580) at oracle.odi.core.persistence.dwgobject.DwgObjectTemplate.execute(DwgObjectTemplate.java:216) at oracle.odi.runtime.agent.processor.impl.StartScenRequestProcessor.doProcessStartScenTask(StartScenRequestProcessor.java:513) at oracle.odi.runtime.agent.processor.impl.StartScenRequestProcessor$StartScenTask.doExecute(StartScenRequestProcessor.java:1073) at oracle.odi.runtime.agent.processor.task.AgentTask.execute(AgentTask.java:126) at oracle.odi.runtime.agent.support.DefaultAgentTaskExecutor$1.run(DefaultAgentTaskExecutor.java:50) at org.springframework.core.task.SyncTaskExecutor.execute(SyncTaskExecutor.java:50) at oracle.odi.runtime.agent.support.DefaultAgentTaskExecutor.executeAgentTask(DefaultAgentTaskExecutor.java:41) at oracle.odi.runtime.agent.processor.TaskExecutorAgentRequestProcessor.doExecuteAgentTask(TaskExecutorAgentRequestProcessor.java:93)

I don't know how to fix it

hi can i get a document on file based loader i ama fresher please help me

ReplyDeletehi mehboob, Can you please let us know your email id for any communication purpose with you ? Thanks Prashant

ReplyDeletesalam mehabub,

ReplyDeleteI have tried generating the CR file and it finished with the status 12. but I don't know how to access the server to get the CR file. Please help me. I have tried HCM SFTP User & Domain SFTP User Logins, the file is not there

Hello, This article is very helpful for someone who wants to understand the FBL process end to end. I have a great time reading this artcile. I have a questiion for you. Once we generate the cross reference file if you open the file say XR_person_type.dat, in that file there are 5 different fields right? Fusion GUID, FUSION Key, PeopleSoft Key, Description and Description2. What I noticed in all the files is that all fields are being populated with some value but how come for the Field PeoplSoft key no value is being generated in any file. When I look in the Oracle reference manual it says that the value in this field is used by the source systrem like PeopleSoft and yet none of the files have a value for this field? could you please help me understand this part?

ReplyDeleteNice article this article is very helpful to me.I have a great time reading this artcile.

ReplyDeletehttp://www.erptree.com/course/oracle-fusion-hcm-training/

Thanks for sharing this informative blog. This is really useful for me to make a bright career........Oracle Fusion HCM Technical Training

ReplyDelete