One limitation of File-Based Loader (FBL) up to release 11.1.4 is that you cannot update records that were created using other tools or the application UIs. This is because FBL internally uses the Key Map table: if it does not find a Fusion ID corresponding to the GUID provided in the data file, it marks the record for Create; if it finds one, it marks the record for Update. FBL first loads the data file into the Load Batch Data stage tables. When a record is imported into these stage tables, the import process compares the record's source GUID and object-type values with the values in the Key Mapping table.

In release 11.1.5.0.0, Oracle introduced an extract called Business Object Key Map, with which you can generate GUIDs for records that were not created using FBL and populate them in the Key Map table. The extract can also generate a file containing the GUIDs, which you can import into the target system. You can then use the GUIDs to create your own data file to update those records. In this post I will explain how to generate the GUIDs and populate them in the Key Map table.

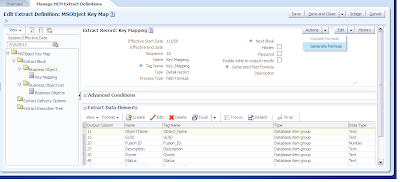

If you are on Release 5, check whether the extract is available under Navigator --> Data Exchange --> Manage HCM Extract Definitions, as shown.

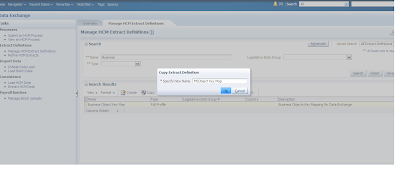

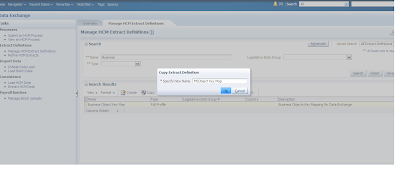

Search for "Business". Oracle strongly suggests creating a copy of this extract and making all your modifications to the copy, so let's do that. Click Copy and give your custom extract a name, say MSObject Key Map, as shown. Click OK.

Now let us configure the parameters that will be passed to this extract when it is submitted. Navigate to the Payroll Checklist using Navigator --> Checklist (under the Payroll menu).

All extracts are defined as flow patterns. Search for your extract, select it, and click the Edit icon. Navigate to the Parameters tab.

Select Effective Date and click the Edit icon. Specify the following:

- Display: No
- Parameter Basis: Post SQL Bind
- Basis Value: select TO_CHAR(SYSDATE,'YYYY-MM-DD') from dual

Click Save.
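If the Oracle date mask in the Basis Value is unfamiliar, the short Python sketch below shows the shape of the string that `TO_CHAR(SYSDATE,'YYYY-MM-DD')` produces. It is only an illustration: the function name `effective_date_default` is made up for this post, and it uses the local machine date, whereas `SYSDATE` is evaluated on the database server.

```python
from datetime import date


def effective_date_default(today=None):
    """Format a date the way the 'YYYY-MM-DD' mask does (hypothetical helper)."""
    # Fall back to the local date; SYSDATE would use the database server's date.
    today = today or date.today()
    return today.strftime("%Y-%m-%d")


# A fixed date makes the resulting format easy to see.
example = effective_date_default(date(2013, 3, 7))
print(example)
```

Because Display is set to No, the user never sees this value at submission time; the flow fills it in on its own.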

Select Business Objects, click the Edit icon, and specify the following:

- Display Format: Lookup Choice List
- Lookup: HRC_LOADER_BO_EXTRACT
- Sequence: 220

Click Save.

Select Extract Type, click the Edit icon, and specify the following:

- Display Format: Lookup Choice List
- Lookup: HRC_LOADER_BO_EXTRACT_TYPE
- Sequence: 210

Click Save.

The sequence value of Business Objects must be greater than that of Extract Type.
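As a quick sanity check on the values entered so far, the rule can be written down as a tiny Python sketch. The dictionary and the function `sequences_valid` are purely illustrative and are not part of any Oracle API; only the two sequence numbers come from the steps above.

```python
# Sequence values from the steps above; the dictionary is only an illustration.
sequences = {"Extract Type": 210, "Business Objects": 220}


def sequences_valid(seqs):
    """Return True when Business Objects is sequenced after Extract Type."""
    return seqs["Business Objects"] > seqs["Extract Type"]


valid = sequences_valid(sequences)
swapped = sequences_valid({"Extract Type": 220, "Business Objects": 210})
print(valid, swapped)
```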

Select Start Date, click the Edit icon, and specify the following:

- Display: No

Click Save and then Submit.

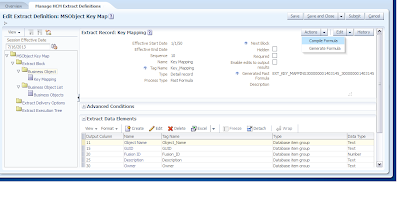

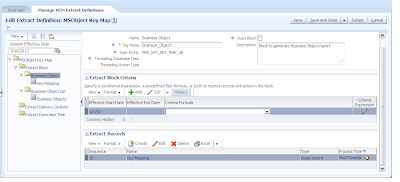

Now let us configure the extract so that we can run it. Go to Navigator --> Data Exchange.

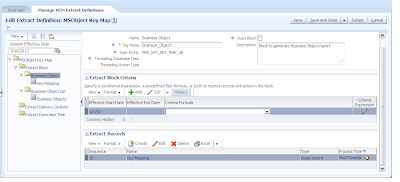

Click Manage Extract Definitions in the regional area and search for your extract. Select it and click the Edit icon.

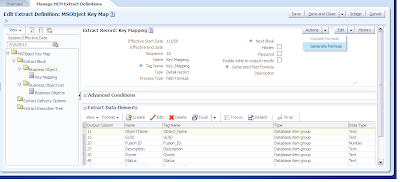

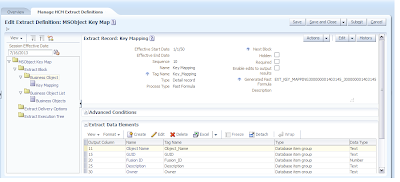

Click the Business Object block. In the Extract Records section, click Key Mapping.

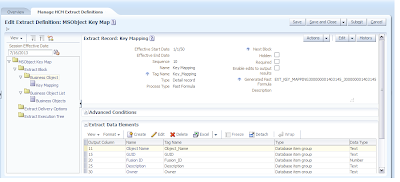

Click the Actions drop-down and select Generate Formula.

Once the formula has been generated, click the Actions drop-down again and select Compile Formula.

Click Save and Close. Click the Business Object block again to verify the formula status. Ensure that the Fast Formula is compiled, which is indicated by a green tick.

Click Business Object List. In the Extract Records section, select Business Objects.

From the Actions drop-down, select Generate Formula. Once the formula is generated, select Compile Formula from the same drop-down.

Click Save and Close. Select Business Object List again and verify that the Fast Formula is compiled, which is indicated by a green tick.

Click Save and Close. This completes the extract setup. Now let's run the extract.

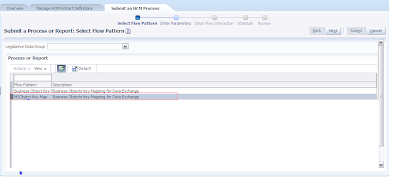

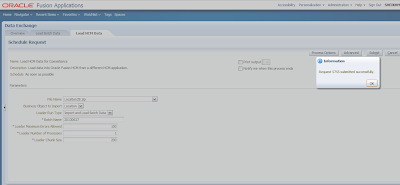

To do this, click Submit an HCM Process, as shown next.

Select your extract and click Next.

Give your extract flow instance a name and select the extract type and business objects. Click Next, Next, and then Submit.

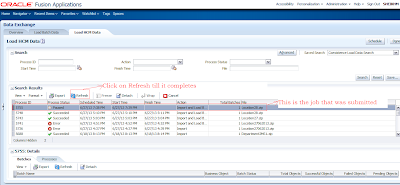

For reference, this is what the import process does once it has compared a record's source GUID and object type with the Key Mapping table:

- If the values exist in the Key Mapping table, the process replaces the source GUID in the stage tables with the Oracle Fusion ID.

- If the values do not exist in the Key Mapping table, an Oracle Fusion ID is generated for the record, recorded in the Key Mapping table, and used to replace the source GUID in the stage tables.
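The lookup described in these two bullets can be sketched in a few lines of Python. This is only a mental model, not how FBL is implemented: the dictionary `key_map`, the function `resolve`, the sample GUIDs, and the ID numbers are all invented for illustration.

```python
import itertools

# Hypothetical in-memory stand-in for the Key Mapping table:
# (source GUID, object type) -> Fusion ID.
key_map = {("GUID-001", "Person"): 300000001}

# Hypothetical ID generator; real Fusion IDs are assigned by the application.
_next_id = itertools.count(300000002)


def resolve(source_guid, object_type):
    """Return (fusion_id, action) for one staged record."""
    key = (source_guid, object_type)
    if key in key_map:
        # Known key: the source GUID is replaced by the existing Fusion ID.
        return key_map[key], "Update"
    # Unknown key: generate an ID, record it, and treat the record as new.
    fusion_id = next(_next_id)
    key_map[key] = fusion_id
    return fusion_id, "Create"


existing = resolve("GUID-001", "Person")
new = resolve("GUID-002", "Person")
repeat = resolve("GUID-002", "Person")
print(existing, new, repeat)
```

The sketch also shows why a record created through the UI cannot be updated by FBL out of the box: it has no entry in the map, so any GUID you supply for it looks like a brand-new record.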

Business Object Key Map Extract Parameters

The Business Object Key Map extract process has the following parameters:

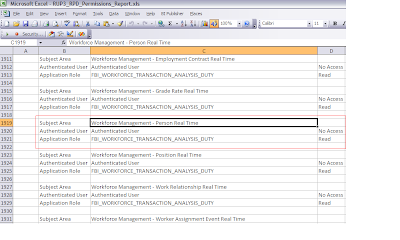

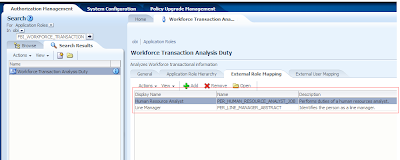

- Extract Type: has the following options:
  - Key Map: select this option to generate a report of the existing values in the Key Map table.
  - Global Unique Identification Generation and Key Map: select this option to create GUID values for those business objects that do not have a Key Map entry and to generate the report.

- Business Object:
  - The list of business objects for which the Key Map report or GUIDs are to be generated.
  - If Business Object is left blank, the extract runs for all business objects.
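To make the difference between the two extract types concrete, here is a small Python sketch of the behaviour described above. It is a simplification under stated assumptions: the option labels `KEY_MAP` and `GUID_AND_KEY_MAP`, the function `run_extract`, and the sample records are hypothetical, and GUIDs are generated with Python's `uuid` module rather than by Oracle.

```python
import uuid

# Hypothetical data: (object type, Fusion ID) pairs and the current Key Map.
records = [("Person", 101), ("Person", 102), ("Job", 201)]
key_map = {("Person", 101): "GUID-EXISTING-101"}


def run_extract(extract_type, business_object=None):
    """Return report rows (object type, Fusion ID, GUID) for the chosen scope."""
    # A blank business object means the extract covers every object type.
    scope = [r for r in records if business_object in (None, r[0])]
    if extract_type == "GUID_AND_KEY_MAP":
        for rec in scope:
            # Only objects without a Key Map entry receive a new GUID.
            key_map.setdefault(rec, uuid.uuid4().hex)
    # Both options finish by reporting what the Key Map holds for the scope.
    return [(obj, fid, key_map[(obj, fid)]) for obj, fid in scope if (obj, fid) in key_map]


report_only = run_extract("KEY_MAP")
generated = run_extract("GUID_AND_KEY_MAP", "Person")
everything = run_extract("KEY_MAP")
print(len(report_only), len(generated), len(everything))
```

Note that the existing entry for the first Person record is left untouched; only the record without an entry gets a GUID, and the Job record stays unmapped because the second run was limited to Person.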

Click the Refresh icon to see whether the process has completed.

To see the output, you need a BI Publisher report. I will explain that in the next post.

In summary, you now know how to generate Key Mapping information using the Business Object Key Map HCM extract.

Cheers....................