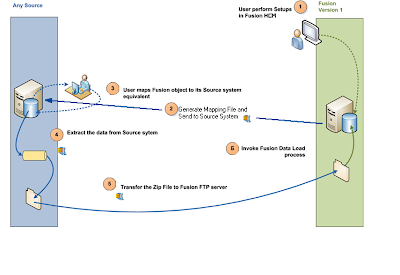

In the first two blog posts (PART I & PART II) I have explained how to create HCM Business Objects Mapping file and use this file to create data file for loading into Fusion. I also explained how to create the zip file and register that using composite. Now as I mentioned in the last post that when you call and submit the composite to register the file, the composite actually moves the file to a path and then call hr2hrSqlldr.py python script to load the data into staging tables.

Let's see what are the series of actions it does. If you open the python script you will find that it first checks what are all the input details provided by the user when he/she called the composite from a table named HRC_XFER_JOB_PARAMS. From this table it gets the list of LBOs that were loaded in the zip file. Now user may load all or few LBOs which are supported by FBL. In order to maintain the data integrity it has to load the LBOs in a a pre-defined sequence. To do this it gets the processing sequence from a table HRC_LOADER_METADATA_REGISTRY. The next screen shows the loading sequence from this table.

LBOs are processed starting with the one having the largest processing_order value. Oracle generates separate JOB IDs for each of the top level LBOs which were specified in the composite parameters. Then it creates SQL*Loader Control file for loading data into HRC_LOADER_BATCH_HEADER and HRC_LOADER_JOB tables and sets the LINE_STATUS = 'Ready To Process'. Once this is done it gets all the data files for each of the LBOs and then creates SQL*Loader Control file dynamically for them to insert into HRC_LOADER_BATCH_LINES_SRC table. Now once all data files have been imported into the table, it then calls a PL/SQL procedure HRC_SQLLOADER.PROCESS_BATCH_TRANSFORM to do the data transformation for each of the top level LBOs.

Now for some reasons it does not insert data into those tables in my instance. Don't know why. May be I might be doing something worng. So I logged SR with Oracle and initial response was that our version(11.1.4.0.0) is not the latest one where FBL works as expected. Let's do this by running Load HCM Data

Click on Load HCM Data under Coexistence.

Click On Schedule and select the file you loaded. I have already a loaded file Location28.zip. So let me show you the steps with this file.

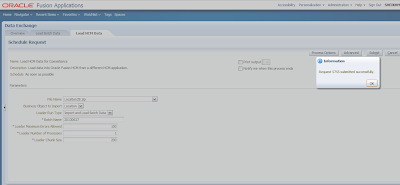

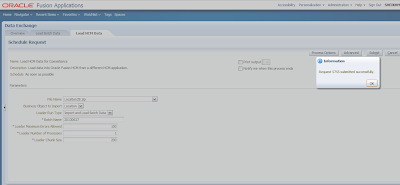

Select the file, LBO and run mode. Now here I want to do - Import into staging tables and then load them to base tables in a single run. So I selected Import and Load Batch Data. You can do Import first and then load them through Load Batch Data process. Click on submit.

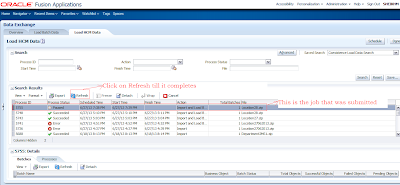

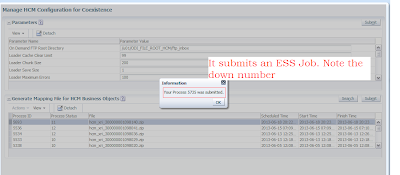

It submits an ESS job which them submits child processes.

Click Refresh to see whether the process is completed.

Click Processes tab to see whether it has any error.

Click on the Batch Name Link as shown.

As can be seen that it has one record and it failed. Click on the 1 under Failed Objects.

Once you do that it opens a screen which looks like this. Expand Location LBO and select the data to see the error.

Here the error is 'Location code already exists'. I intentionally took this address to show the various features. Basically I created a Location from Fusion Application Navigator-->Workforce Structures->Manage Location.

This picture shows the error details.

I used that location code to create data file. Wanted to explore whether it is possible to update the data which are not created by FBL. So you can see with this process you can not update data which are not created by FBL. Recently in release 5 onwards Oracle has introduced Business Object Key Map HCM Extract to generate the GUIDs for business objects created in application manually. Will explain the process of doing that in a separate post after completing this series.

Many of ou may be wondering why did it try to insert in stead of update.

Oracle Fusion HCM maintains a Key Mapping table HRC_LOADER_BATCH_KEY_MAP that records, for each business object, its type, source GUID, and Oracle Fusion ID (the ID by which it is identified in Oracle Fusion). For example:

OBJECT TYPE SOURCE GUID ORACLE FUSION ID

--------------- --------------- ----------------------

Person PERS123 006854

Person PERS456 059832

When a record is imported to the Load Batch Data stage tables, the import process compares the record’s source GUID and object-type values with values in the Key Mapping table:

Let's see this process. I am going to update the Location code from the application.

The record details.

MEHABUB_LOC is the location which is there in the system. Let's change this to MEHABUB_LOC1

Then set the Batch status to 'Ready to Process'

Rerun the Load Batch Data Process.

Click on Run.

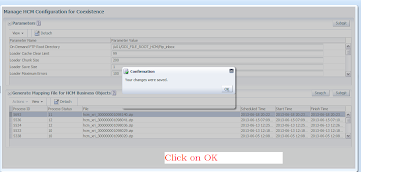

Click on Submit.

Note down the Process ID. This is nothing but ESS job.

Wait till the process is completed.

Now you can see that it has created MEHABUB_LOC1 location in fusion.

This picture shows that the location is now available in application. I am going to use FBL to update the address details of this location.

I just changed the address details keeping location code and GUID same and created a new data file Location29.zip and called the composite

This picture shows the composite call parameter details.

Composite Flow.

Submit Load HCM Data with Import and Load Batch Data for the file Mehabub29.zip.

ESS Job id.

Wait for the process to complete.

All process completed successfully.

This shows that one object has been changed.

Let's check from the application.

The marked address is what I changed in my data file.

So this completes the series of FBL data loading as a conversion tool.

In summary from this post you know how

Cheers........................

Let's see what are the series of actions it does. If you open the python script you will find that it first checks what are all the input details provided by the user when he/she called the composite from a table named HRC_XFER_JOB_PARAMS. From this table it gets the list of LBOs that were loaded in the zip file. Now user may load all or few LBOs which are supported by FBL. In order to maintain the data integrity it has to load the LBOs in a a pre-defined sequence. To do this it gets the processing sequence from a table HRC_LOADER_METADATA_REGISTRY. The next screen shows the loading sequence from this table.

LBOs are processed starting with the one having the largest processing_order value. Oracle generates separate JOB IDs for each of the top level LBOs which were specified in the composite parameters. Then it creates SQL*Loader Control file for loading data into HRC_LOADER_BATCH_HEADER and HRC_LOADER_JOB tables and sets the LINE_STATUS = 'Ready To Process'. Once this is done it gets all the data files for each of the LBOs and then creates SQL*Loader Control file dynamically for them to insert into HRC_LOADER_BATCH_LINES_SRC table. Now once all data files have been imported into the table, it then calls a PL/SQL procedure HRC_SQLLOADER.PROCESS_BATCH_TRANSFORM to do the data transformation for each of the top level LBOs.

Now for some reasons it does not insert data into those tables in my instance. Don't know why. May be I might be doing something worng. So I logged SR with Oracle and initial response was that our version(11.1.4.0.0) is not the latest one where FBL works as expected. Let's do this by running Load HCM Data

Click on Load HCM Data under Coexistence.

Click On Schedule and select the file you loaded. I have already a loaded file Location28.zip. So let me show you the steps with this file.

Select the file, LBO and run mode. Now here I want to do - Import into staging tables and then load them to base tables in a single run. So I selected Import and Load Batch Data. You can do Import first and then load them through Load Batch Data process. Click on submit.

It submits an ESS job which them submits child processes.

Click Refresh to see whether the process is completed.

Click Processes tab to see whether it has any error.

Click on the Batch Name Link as shown.

As can be seen that it has one record and it failed. Click on the 1 under Failed Objects.

Once you do that it opens a screen which looks like this. Expand Location LBO and select the data to see the error.

Here the error is 'Location code already exists'. I intentionally took this address to show the various features. Basically I created a Location from Fusion Application Navigator-->Workforce Structures->Manage Location.

This picture shows the error details.

I used that location code to create data file. Wanted to explore whether it is possible to update the data which are not created by FBL. So you can see with this process you can not update data which are not created by FBL. Recently in release 5 onwards Oracle has introduced Business Object Key Map HCM Extract to generate the GUIDs for business objects created in application manually. Will explain the process of doing that in a separate post after completing this series.

Many of ou may be wondering why did it try to insert in stead of update.

Oracle Fusion HCM maintains a Key Mapping table HRC_LOADER_BATCH_KEY_MAP that records, for each business object, its type, source GUID, and Oracle Fusion ID (the ID by which it is identified in Oracle Fusion). For example:

OBJECT TYPE SOURCE GUID ORACLE FUSION ID

--------------- --------------- ----------------------

Person PERS123 006854

Person PERS456 059832

When a record is imported to the Load Batch Data stage tables, the import process compares the record’s source GUID and object-type values with values in the Key Mapping table:

- If the values exist in the Key Mapping table, then the process replaces the source GUID in the stage tables with the Oracle Fusion ID.

- If the values do not exist in the Key Mapping table, then an Oracle Fusion ID is generated for the record, recorded in the Key Mapping table, and used to replace the source GUID in the stage tables.

Let's see this process. I am going to update the Location code from the application.

The record details.

MEHABUB_LOC is the location which is there in the system. Let's change this to MEHABUB_LOC1

Then set the Batch status to 'Ready to Process'

Rerun the Load Batch Data Process.

Click on Run.

Click on Submit.

Note down the Process ID. This is nothing but ESS job.

Wait till the process is completed.

Now you can see that it has created MEHABUB_LOC1 location in fusion.

This picture shows that the location is now available in application. I am going to use FBL to update the address details of this location.

I just changed the address details keeping location code and GUID same and created a new data file Location29.zip and called the composite

This picture shows the composite call parameter details.

Composite Flow.

Submit Load HCM Data with Import and Load Batch Data for the file Mehabub29.zip.

ESS Job id.

Wait for the process to complete.

All process completed successfully.

This shows that one object has been changed.

Let's check from the application.

The marked address is what I changed in my data file.

So this completes the series of FBL data loading as a conversion tool.

In summary from this post you know how

- FBL python script creates Control files

- Oracle derives the processing order of the LBOs

- Data transformation happens inside Fusion

- To Load data into staging tables

- To get the errors and fix them from Application

- To create data file records created with FBL to update

Cheers........................